RBF support vector machine on such wide data. The dimensionality of the data is 4096 and it is slow to train an Of components associated with lower singular values.įor instance, if we work with 64圆4 pixel gray-level pictures Space that preserves most of the variance, by dropping the singular vector It is often interesting to project data to a lower-dimensional

Input data for each feature before applying the SVD. Number of samples to be processed in the dataset.Īs in PCA, IncrementalPCA centers but does not scale the Memory usage depends on the number of samples per batch, rather than the In order update explained_variance_ratio_ incrementally. IncrementalPCA only stores estimates of component and noise variances, Using its partial_fit method on chunks of data fetched sequentiallyįrom the local hard drive or a network database.Ĭalling its fit method on a sparse matrix or a memory mapped file using Out-of-core Principal Component Analysis either by: IncrementalPCA makes it possible to implement Processing and allows for partial computations which almostĮxactly match the results of PCA while processing the data in a The IncrementalPCA object uses a different form of The biggest limitation is that PCA only supportsīatch processing, which means all of the data to be processed must fit in main The PCA object is very useful, but has certain limitations for Model selection with Probabilistic PCA and Factor Analysis (FA) Score method that can be used in cross-validation:Ĭomparison of LDA and PCA 2D projection of Iris dataset

Probabilistic interpretation of the PCA that can give a likelihood ofĭata based on the amount of variance it explains. Strong assumptions on the isotropy of the signal: this is for example the caseįor Support Vector Machines with the RBF kernel and the K-Means clusteringīelow is an example of the iris dataset, which is comprised of 4įeatures, projected on the 2 dimensions that explain most variance: This is often useful if the models down-stream make

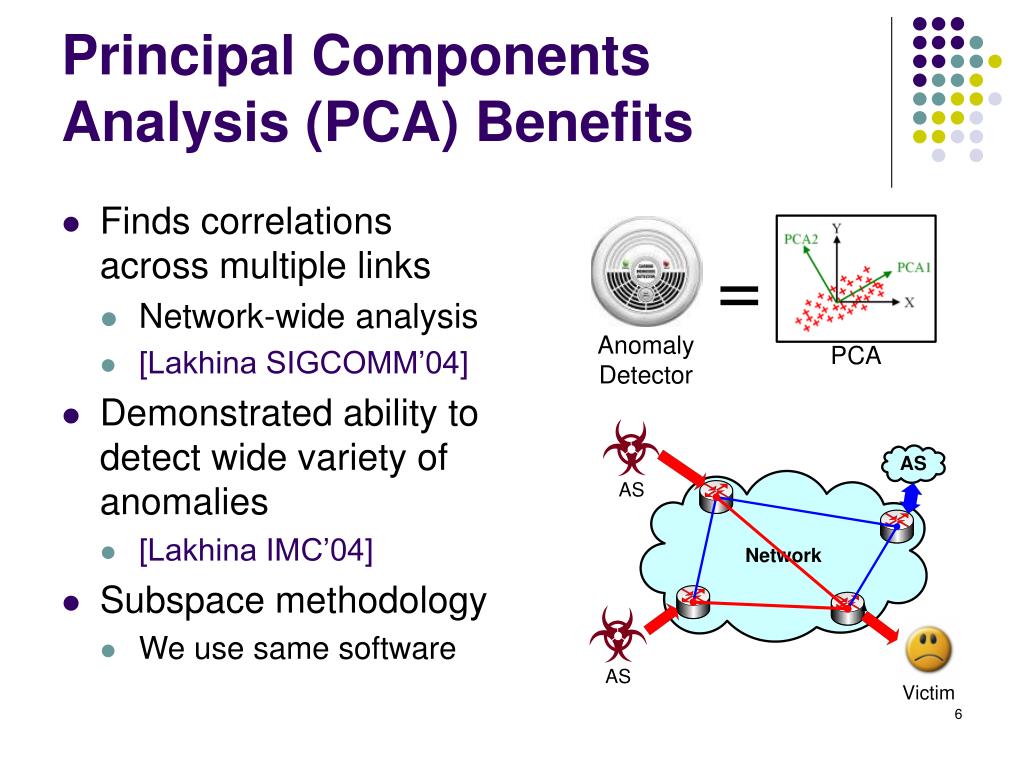

Possible to project the data onto the singular space while scaling eachĬomponent to unit variance. The optional parameter whiten=True makes it PCA centers but does not scale the input data for each feature beforeĪpplying the SVD. That learns \(n\) components in its fit method, and can be used on new Scikit-learn, PCA is implemented as a transformer object Orthogonal components that explain a maximum amount of the variance. PCA is used to decompose a multivariate dataset in a set of successive Exact PCA and probabilistic interpretation ¶ Principal component analysis (PCA) ¶ 2.5.1.1. Decomposing signals in components (matrix factorization problems) ¶ 2.5.1.
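The exact-PCA behaviour described in this section can be checked with a short script. This is a minimal sketch that assumes scikit-learn and its bundled iris loader (`load_iris`) are available. It does four things:

- It projects the 4 iris features onto 2 components.
- It checks that the learned `mean_` equals the column means, which shows centering without scaling.
- It confirms that `whiten=True` yields unit-variance components.
- It uses `cross_val_score` to compare numbers of components. With no `scoring` argument, `cross_val_score` falls back to `PCA.score`, the average log-likelihood.

The range of `n_components` tried is an arbitrary illustration.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.model_selection import cross_val_score

X, y = load_iris(return_X_y=True)  # 150 samples, 4 features

# Project the 4 features onto the 2 directions that explain most variance.
pca = PCA(n_components=2)
X_2d = pca.fit_transform(X)
print(X_2d.shape)  # (150, 2)
print(pca.explained_variance_ratio_)

# PCA centers each feature (mean_ is the column mean) but does not scale it.
centered = np.allclose(pca.mean_, X.mean(axis=0))

# whiten=True rescales each projected component to unit variance.
X_white = PCA(n_components=2, whiten=True).fit_transform(X)
white_std = X_white.std(axis=0, ddof=1)
print(white_std)  # approximately [1. 1.]

# With no scoring argument, cross_val_score calls PCA.score: the average
# log-likelihood under the probabilistic PCA model (higher is better).
cv_scores = {k: cross_val_score(PCA(n_components=k), X).mean() for k in range(1, 4)}
best_k = max(cv_scores, key=cv_scores.get)
print(cv_scores, best_k)
```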
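The chunked IncrementalPCA route and randomized-SVD PCA can both be compared against batch PCA on synthetic data. This is a sketch under stated assumptions:

- The dataset is a hypothetical stand-in: 1,000 samples and 50 features, with variance concentrated in 5 directions.
- `np.array_split` simulates chunks that would otherwise be read from disk or a database.
- The tolerances are illustrative, not guarantees.

`partial_fit`, `n_samples_seen_`, `svd_solver="randomized"` and `random_state` are standard scikit-learn parameters and attributes.

```python
import numpy as np
from sklearn.decomposition import PCA, IncrementalPCA

rng = np.random.RandomState(0)

# Hypothetical stand-in for a large dataset: 1000 samples x 50 features whose
# variance is concentrated in 5 latent directions plus a little noise.
latent = rng.normal(size=(1000, 5))
mixing = rng.normal(size=(5, 50))
X = latent @ mixing + 0.1 * rng.normal(size=(1000, 50))

# Out-of-core style fitting: feed IncrementalPCA one chunk at a time.
# In practice each chunk would be fetched from disk or a database.
ipca = IncrementalPCA(n_components=5)
for chunk in np.array_split(X, 10):  # 10 chunks of 100 rows each
    ipca.partial_fit(chunk)
print(ipca.n_samples_seen_)  # 1000

# Batch PCA (whole array in memory) and randomized-SVD PCA for comparison.
pca = PCA(n_components=5).fit(X)
rpca = PCA(n_components=5, svd_solver="randomized", random_state=0).fit(X)


def projector(components):
    # Singular vectors are only defined up to sign, so compare the spanned
    # subspaces through the projection matrix C^T C instead of raw rows.
    return components.T @ components


ipca_matches = np.allclose(
    ipca.explained_variance_, pca.explained_variance_, rtol=1e-2
) and np.allclose(projector(ipca.components_), projector(pca.components_), atol=1e-2)
rpca_matches = np.allclose(
    rpca.explained_variance_, pca.explained_variance_, rtol=1e-2
) and np.allclose(projector(rpca.components_), projector(pca.components_), atol=1e-2)
print(ipca_matches, rpca_matches)
```

Only one 100-row chunk needs to be in memory while `partial_fit` runs, which is the point of the out-of-core workflow. The comparison with batch PCA is possible here only because the synthetic array is small.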
